Why you should prefer Metaprogramming over Compile-Time Execution

On the problem with complex languages, toolchains, ecosystems, and why metaprogramming is superior to compile-time execution.

Recently, there has been some discussion regarding compile-time execution (CTE) in C and other languages on X. The conversation mostly boils down to the following: should a language have built-in features for CTE (generics, templates, macros, preprocessors, etc), or should this be accomplished by having a user-level metaprogram parse and process the files? This question and the title of this post are a little misleading. Metaprogramming is a way for you to do compile-time execution. What I mainly want to focus on is whether this should be a built-in feature or a userspace program. The purpose of this post is to explain why I prefer the latter.

1. It makes the language more complicated

Introducing more features to a language makes it larger and more complicated. Larger languages are harder to support, harder to maintain, and harder to fully understand. They also make it much more difficult for people to write tools that truly support them. For example, the latest C specification1 is about 500 pages and 240,000 words. On the other hand, the latest C++ specification2 is over 1,800 pages and 770,000 words. If you’re a C++ programmer, have you read all—or even most—of those 1,800 pages? Does your editor, compiler, or debugger handle every obscure edge case buried in those half a million words? Probably not.

People may still argue:

Yeah, but that’s good. You can choose the needed set of features in C++ and only pay when you use it—at compile time or runtime.

No, you’re wrong. EVERYONE has to pay for it. C++ compilers are way slower, even when compiling standard C code. Not only that, all the external tooling is extremely bloated and brittle. And because everyone supports a different subset and never the full set (because it’s intrinsically too complex), these gigantic toolchains have a significantly low level of composability.

But hey, at least you’re not a web developer.

The total word count of the W3C specification catalogue is 114 million words at the time of writing. If you added the combined word counts of the C11, C++17, UEFI, USB 3.2, and POSIX specifications, all 8,754 published RFCs, and the combined word counts of everything on Wikipedia’s list of longest novels, you would be 12 million words short of the W3C specifications.

I conclude that it is impossible to build a new web browser. The complexity of the web is obscene. The creation of a new web browser would be comparable in effort to the Apollo program or the Manhattan project.

-Drew DeVault, The reckless, infinite scope of web browsers

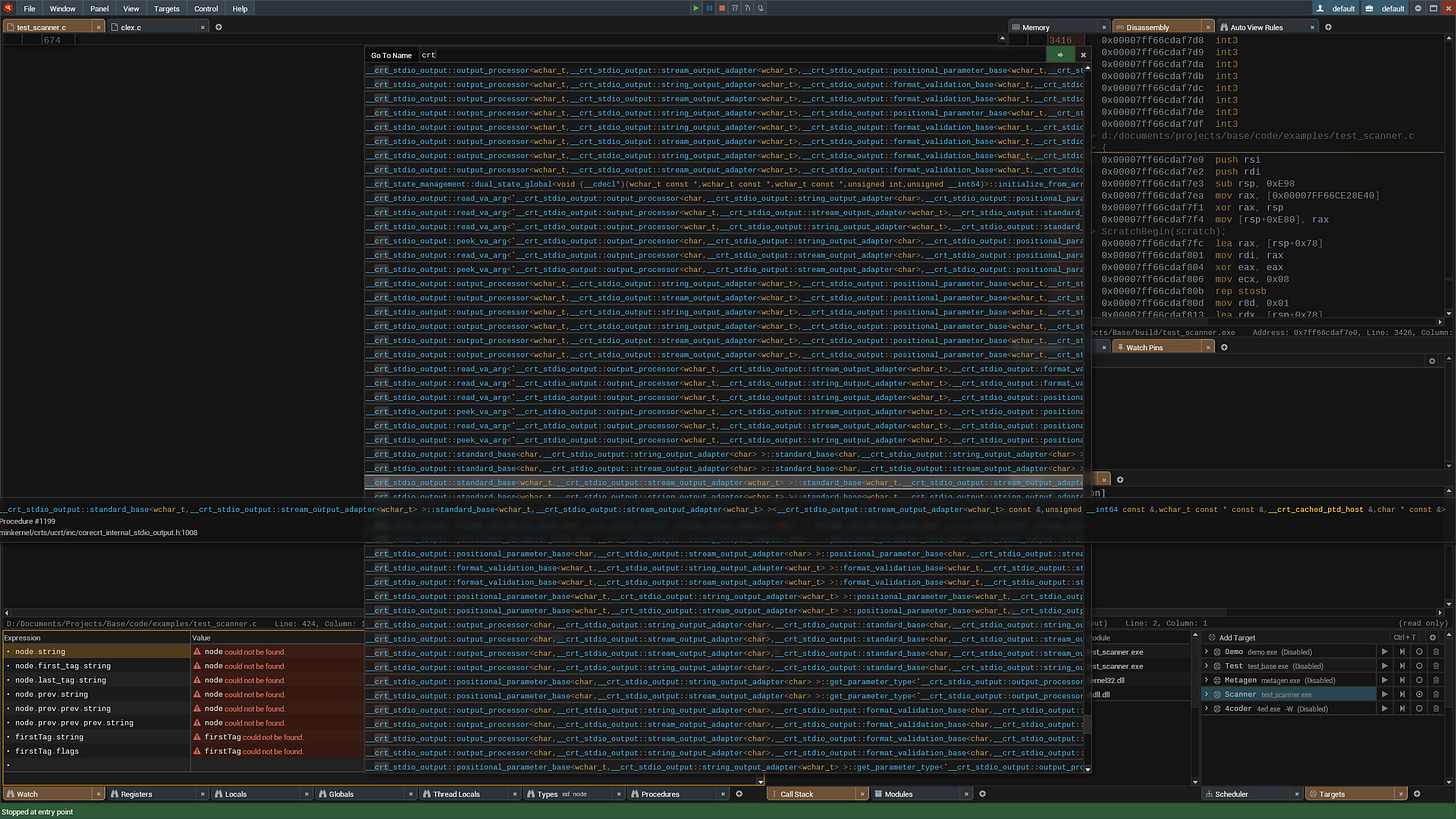

Lucky for us, C programmers only need to deal with this3:

2. Runtime code vs Compile-time code

Now there are two different types of code that you can write in the language: compile-time and runtime. In C or C++, features like templates or preprocessing have completely different syntaxes/semantics from normal code. They are practically two separate languages that the user must now learn. If your job is to write a parser to implement syntax highlighting for a code editor, you could see how annoying it is to write and maintain two different parsers. Newer languages (JAI, Nim, Zig, etc) choose a different design where CTE uses the same syntax as its runtime counterpart. Pretty much anything you can do at runtime is now possible at compile time. Problem solved, right? You only need to write a single parser and learn a single language. Most people, including me, would say that this is much better. Sadly, the problem lies in the ‘Pretty much’ part. Just because these languages design CTE to be similar to runtime execution doesn’t necessarily mean they are the same.

Have you checked from a first principles perspective that all of the language's features work and make sense in both cases? Do the differences between each version confuse or misguide the user? One common example of this is machine instructions. Is the "bytecode/JIT" platform agnostic or trying to emulate a real platform? Can users still use different intrinsics in the compile-time code? If your language has inline assembly, is it still usable in CTE?

Another example is pointers. Due to ASLR, pointers used in compile-time code differ from those in runtime code. For example, if you store some pointers (or function pointers, arrays, AST nodes) in a table, attempting to dereference them later in runtime code would result in a crash. You might argue that the same problem can arise in the metaprogram case. Yes, but because these are separate programs, most programmers already inherently understand their different boundaries, and no one ever confuses or accidentally sends pointers between processes. Not to mention, in many metaprogramming pipelines, normal code doesn’t even know if a metaprogram exists in the first place. Having separate metaprograms also means that the only reliable way of IPC between them, your code, and other tools (compilers, editors, VCSs) is the file system and CLI.

3. Less control over what gets compiled and when

Using a metaprogram means controlling when to compile and when to run it separately. Hitting F1 compiles the meta code, F2 runs the metaprogram, and F3 executes both, for example. Maybe F4 regenerates all the asset metadata, while F5 compiles the normal code using the generated code. I can control how many meta passes to run and specify which directory or file extensions to apply it to. The metaprogram might cache each file and only run when specific changes are made, or it could work with my version control system to automatically run on diffs only4.

If this is built into the language, I won’t be able to choose or configure when the meta code is compiled and run. It must always be recompiled and run simultaneously, alongside the normal code, every time there’s a single change in either the meta or normal code. Users are forced into the “Compile meta code → Run meta code → Compile normal code” cycle, every time they hit the build button. The compiler could cache the code and compile incrementally to lessen the pain. However, this would increase the workload for compiler vendors and make it harder for people to adopt your language.

4. Performance

The metaprogram is compiled directly into native code, whereas CTE is typically transformed into bytecode and interpreted by the compiler. This means there's a difference in speed between the two. There is also a difference in complexity between a normal compiler and a compiler that also needs to interpret some of its code, leading to the previously mentioned tooling problem.

Another opportunity for performance gains is generating different code for different target platforms. With metaprogramming, not only can the compiled metaprogram run faster by using native instructions, but your actual application can also gain considerable speed from target-specific generated code. The compiler could offer a similar feature, but before doing so, it would need to answer the earlier “platform-agnostic or real-platform” question. Overall, the metaprogramming route is more flexible and gives you greater control.

5. Debuggability

Because the metaprogram is just a normal executable, it’s debuggable. Additionally, because you control how many passes and in which order these metaprograms run, you can also choose which ones to be compiled in debug mode and which don’t. You can also write test cases, feed them specific test inputs, or perform fuzz testing.

Interpreted bytecode, on the other hand, can’t be trivially debugged. In theory, JIT compilation could solve the problem. If a debugger established a standard—say, by providing a single header-only library for emitting special debug messages—that allowed JITted code to specify its own debug information (similar to how Windows signals a DLL load via a debug event), that code then could be both debuggable and run at native speed5. In practice, however, no modern language is doing this. Implementing it well also demands enormous effort from compiler and debugger vendors, which brings us right back to the same old tooling problem.

6. Inspectability

Debugging is easier not only for the metaprogram but also for the generated code. Everybody probably has at least one experience where they're pulling their hair out trying to figure out why some templates or macros fail to compile while reading 200+ error messages. A friend once showed me a failed compilation from his industrial C++ code—the error output was about 70,000 characters long. Good luck.

People also pull their hair out while trying to understand the metacode. Rather than 200+ lines of error messages, you now get 200+ lines of C++ heavy-template code. Understanding the type of a single variable can mean traversing a sprawling graph of typenames and inheritances. Each additional layer of class or typename increases the cognitive load on your brain. Most of the time, you feel like C++’s type system and metaprogramming features are working against you rather than for you (Welcome to the club).

Generics, templates, macros, preprocessors, and other CTE features suck at this because I can never inspect the final code directly (TBF with preprocessing, you can ask the compiler to expand them for you). In contrast, because my metaprogram outputs normal functions and types, I can easily inspect it and know what went wrong. Is the input wrong, is there a case the metaprogram doesn't handle, or is the generated output being misused?

When the final code isn't visible, compilers can't report errors accurately, code editors can't color syntax and jump to definition trivially, and debuggers can’t display richer visualization (unless you encode substantially more in the debug format). While many of these issues can be minimized, doing so demands substantial effort from all these different tools.

7. Did I mention the tooling problem?

Debuggers, editors, static analyzers, and so on are what make the ecosystem usable—dramatically more so than the language frontend. You want them to be easier to make. Not harder. The language is irrelevant.

-Ryan Fleury

The most important aspect of a language is its ecosystem—its libraries, frameworks, debuggers, IDEs, code editors, static analyzers, educational materials, documentation, and communities. Programs that are written in it and for it. Text that is written by users and for users. The more tools a language has, the easier it is to use. The simpler a language is, the more tools are built for it.

Writing a parser for C++ is harder than for C. Writing a debugger for C++ is harder than for C. Writing a compiler for C++ is harder than for C. Because C is smaller and simpler than C++, developing tools for it is easier, more complete, and of higher quality. As I’ve mentioned before, adding more features to a language inevitably makes it larger and more complex, discouraging people from creating tools for it. The C ecosystem is a good example. Part of it is due to historical reasons, and part is because C is a relatively simple language (even with all of its quirks), but C has stood the test of time when it embeds itself in millions of codebases, and—unlike those new languages with a single compiler that mostly rely on LLVM and an ecosystem accounting for at most 5% of C’s, C has numerous compilers, backends, libraries, and tools built around it (for better or worse).

Simple toolchains maximize the amount of decentralized and independent software. Complicated toolchains, on the other hand, minimize this to a small, locked-down set of extremely bloated and highly controlled software. This, in turn, not only leads to less competition and cooperation but also makes your software less portable and self-reliant, because you must now depend on a small amount of unmanageably large, unfathomably slow, tightly controlled software, often with an unreadable legacy codebase in the rare case that you have access to the source code. The same applies to languages, formats, protocols, standards, and so on. Each of these layers adds more complexity that everyone must deal with. Developing software is already hard; handling these added complexities makes it harder. Unnecessary complexity is the root of all evil.

The more complexity we put into our system, the less likely we are to survive a disaster. We’re acting right now like we believe that the upper limit of what we can handle is an infinite amount of complexity, but I don’t think that makes any sense, so what’s the upper limit? How would we decide how much complexity we can handle?

-Jonathan Blow, Preventing the Collapse of Civilization

What should you do now?

After reading this, I hope I have at least convinced some of you of the benefits of having a userspace metaprogram. Contrary to popular belief, you don’t need to go looking for new programming languages to have CTE. Metaprogramming can be done in any language, and learning to do so will be more beneficial to you. Why wait for your language to have some features when you can implement them yourself? Why succumb to the decision of some committees that are not in control of your codebase whatsoever? As for aspiring language designers, I hope you reconsider adding these features. Depending on your context, shipping a parsing library as part of the standard library, alongside some examples, might be the better choice.

“But how can I do metaprogramming? What does your metaprogramming workflow look like?“ intrigued readers might wonder. Do I hand-roll a custom parser, use a third-party one, or rely on simple text substitution? How do I set up my metaprogramming pipeline? Is it single-pass, multi-pass, preprocessing, or postprocessing? Do I use C only or combine different languages? Maybe C for engine code, while Lua for gameplay code? What do I use it for? Is it for generating header files, tables, and documentation? Maybe it’s for type information, data structures, or asset metadata?

All these questions—plus more—will be addressed in the next post. I’ll explore different techniques I’ve discovered, rules/constraints I follow, and various use cases for metaprogramming. In the meantime, while waiting for that award-winning article, I recommend checking out Ryan Fleury’s Table-Driven Code Generation. His approach to metaprogramming is quite interesting and something I’ll discuss in great detail. You can also explore his RAD Debugger codebase, where he applied this technique to real-world use cases.

Frequently Asked Questions

Until then, let's address a couple of arguments I frequently heard—starting with the claim that hand-rolling a parser is hard. People may say:

It’s hard to make a complete type-aware parser for C. What if there's typedef to make type Y an alias for X? How about nested type’s declaration inside a function? Have you accounted for anonymous members at all?

These arguments—often from those less experienced with writing parsers or metaprogramming systems—actually prove my earlier point about complex languages: you’re right. Because C is more complicated than it needs to be, writing tools (in this case, parsers) for it becomes harder, so you don’t want to do it. You finally get it!

That said, while C has many quirks that make parsing it more difficult than necessary, it remains relatively simple—especially compared to C++. Your metaprogram can still perform basic C parsing to extract useful information about functions, types, or custom patterns you define. The issue isn’t that you’re limited to textual substitution only, forced to rely on a third-party "military-grade" parser, or required to handcraft a fully spec-compliant one. You can always decide how powerful and complete your parser needs to be and which subset of C your metaprogram should handle. If you don’t need information about nested type declarations, you can simply ignore them. We’re just gonna do as little as we have to, to do the work.

By their nature, third-party parsers must handle all possible combinations of every feature of a language in the most generic way possible, which won’t fit your needs. Blindly using a generic parser, without even considering looking behind the curtains, means that you voluntarily put more complexity on yourself. This complexity often results in longer compile times, more complicated build steps, larger code footprints, slower and less responsive software, and a more challenging integration process. That’s why I recommend writing a simple parser and a reusable helper layer.

Another argument that people often make is:

The performance and debuggability points do not apply to Lisp (or language X). There’s this Lisp compiler that compiles directly to machine code and doesn’t have an interpreter. Expansion can be traced and your macro code is debuggable.

True. Theoretically speaking, every single point mentioned above can be addressed, except for the first and last ones. You can have debuggers that work with JITed code or compilers for interpreted languages. However, doing so raises the bar for features and complexity across all tools. That's my main concern. You can have all the debuggers, editors, analyzers, and compilers for any language that you want, to fix as many language problems as you have. But the reality is that most people are unwilling to support and write tools for it. Citing older, simpler languages like Lisp or C only proves my point. And practically speaking, you can do CTE with metaprogramming in any language by simply using a metaprogram to preprocess your code—so why complicate things?

People also asked:

C doesn’t have any metaprogramming features, so I can understand why you’d have to implement your own. C++ templates are poorly designed and implemented, so I also get why you might prefer something else. But what about the newer systems programming languages—like JAI, Odin, Zig, Nim, D, or Rust—that already have built-in metaprogramming features? What’s wrong with preferring those over hand-rolling your own metaprogramming pipeline, or even using them to assist in the process?

You’re correct: a big reason why I prefer metaprogramming is that C doesn’t have any. If C had any well-designed feature, I would have used it. An example of this is the preprocessor. I’m not claiming that it’s well-designed, but because it exists and is well-established—I do use it to some extent, both in isolation and in combination with metaprogramming.

However, the reason I—and so many others—use C is due to its ecosystem. If you went back in time and made the language more complex by adding more features, would people still want to adopt it? I don’t know, nor do I care about these what-if scenarios.

The Future

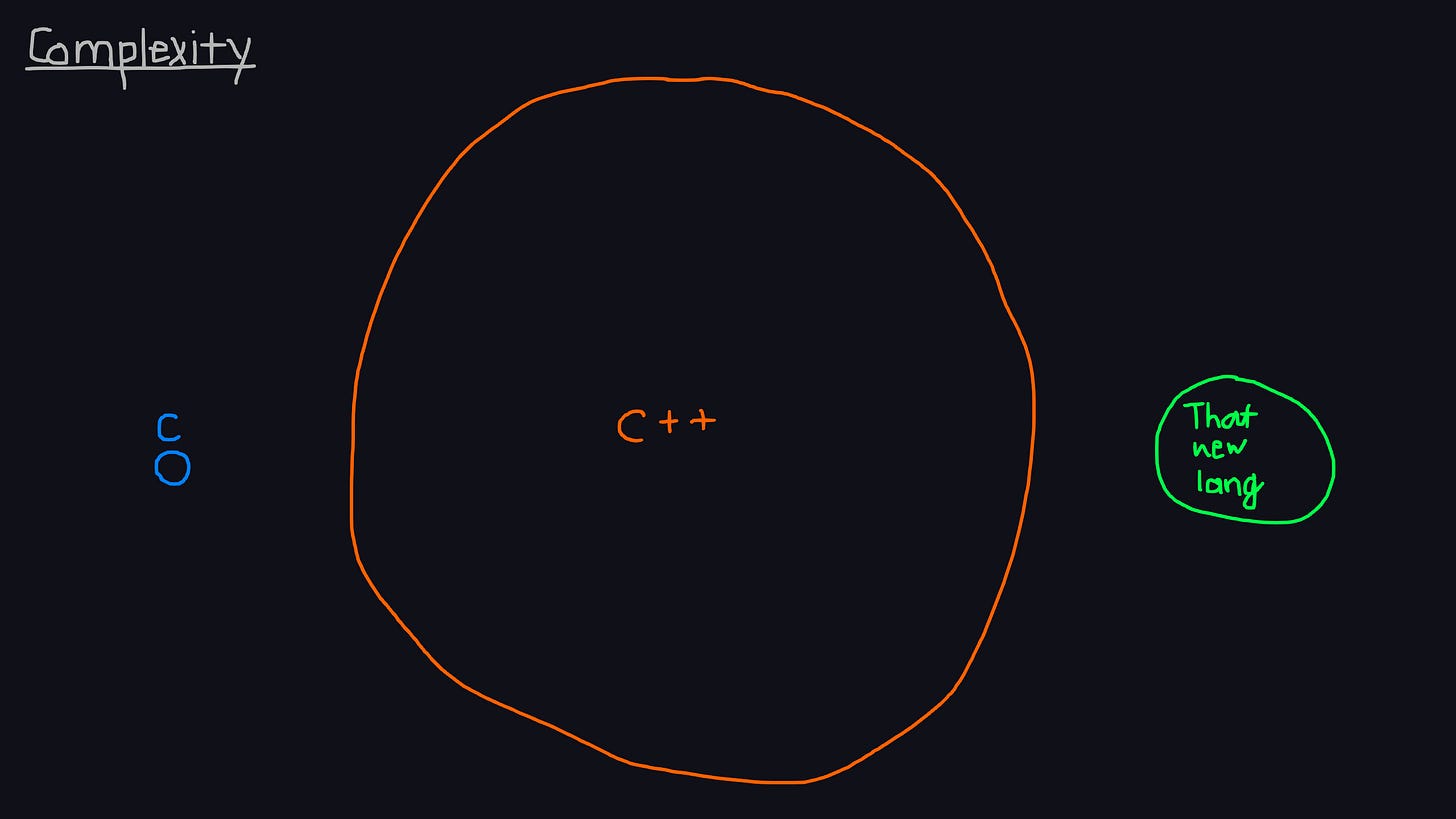

What I do care about is the future of programming and what an entirely new computing ecosystem looks like. I don’t want to be stuck with C as the foundational language forever. However, I do want to emphasize the importance of a simple toolchain. C, by itself, is already too complex. It must be simplified and stripped down. Most of the new “C-replacement” system languages—except for maybe JAI and Odin—are the opposite of this. They thought the problem with C is its lack of language features, and instead opt for big ideas that complicate the ecosystem, making all of its key strengths—fast iteration times, clear information flow, broad toolchain support, and sharp code analysis—harder to achieve.

By choosing to use these new, not-yet-mature languages, you are trading decades worth of tooling for a slightly cleaned-up version of C that might be nicer to type with some useful built-in features. Importantly, these features—and I hope to cover this in the following post—are not that hard to implement in C with metaprogramming, so the trade-off just isn’t worth it.

What is worth it, though, is to reevaluate the entire ecosystem, not just the programming language. What are some aspects of the ecosystem that we should focus on? What should a new system programming language in this new ecosystem look like? What are some concrete ideas for a simpler, better version of C? I’ll aim to answer these questions in the upcoming posts6.

In this series, I consider covering:

Different ways of writing parsers and metaprogramming systems, including experimental and untested ones.

What does an ideal system programming language look like? What are some of the current problems with modern languages?

Reconsider parts of the current ecosystem we take for granted. How can they be improved, and what might a better alternative look like?

If any of this sounds interesting to you, then consider subscribing!

-Trần Thành Long

ISO/IEC 9899:2018 for C17.

ISO/IEC 14882:2020 for C++20.

This is an area where JAI's CTE truly outshines all other modern languages. You can freely stack and nest as many #insert and #run directives as you like. Combined with the built-in build system, this makes it trivial to handle all sorts of tasks—sending packets to a build server, integrating with your VCS, waiting for user input to choose build settings, or even displaying information visually instead of as plain text. You can literally run Space Invaders inside it.

A simpler (but worse) solution is for the debugger to have an interpreter API.

In the meantime, I recommend reading "More Languages Won't Fix the Computing World."

Nice one! Waiting for the next post :)

What about the hardware and CPU/GPU optimizations? Sure, if you’re running on bare metal, a metaprogram that decides what to compile and when it probably a good idea. If you’re running code inside a Linux Docker image preset for an x64 architecture, you might be getting in the way of what the CPU on the actual box is trying to do and create bizarre behavior or get slowed performance as the chip, the OS, the toolchain, and your metaprogram fights out what’s FI and what’s FO.